Project HoneyBadger, the Picoservices Netflix Stack

How we put the Netflix stack (the Gallery / Lolomo) on a few old Android phones.

Hello again! Another two Netflix hackdays have come and gone, and once again people asked a simple “how did you do it?” or a more incredulous “you couldn’t have possibly put the Netflix server stack on a couple of Android phones!” Well, my first picks for a hacking dream team, Alex and Carenina, got back together, this time joined by Michael, and we managed to bewilder the masses once again. Join me for a brief tale of woe, frustration, and anguish, ending with a shocked audience and our first hackday award!

The TL;DR on what we did: we looked at how Netflix clients talk to the servers, created a set of smaller-footprint microservices (picoservices!) that ran approximately real workloads on a few phones (keeping the same sort of organization), extracted some precomputed workloads, and then linked up a number of real TV devices to run their browse sessions off the phones instead of the real server stack. How we did it, and why, is below.

Inception

As is becoming commonplace, HoneyBadger began with a challenge. After debating a few of the finer points of our future server-to-server RPC with Michael and a few of our API specialists, I met with our head of personalization, Jon Sanders, to see what sort of appetite some of our recommendations wizards might have for a more efficient model. After I disclosed to him that our current client / server interactions were a bit on the chatty side, he asked me in his usual straightforward manner, “Guy, don’t you think we should start with that first?”

I couldn’t disagree, so I began shopping the idea around. Having the freedom to work on different things doesn’t mean you’ll have instant agreement on where they go in the business priorities.

The comments came back: I needed to make a stronger case, because there were just too many unknowns about what an entirely different interaction model would look like. The conversations were a bit different than the first time I’d mentioned NESFlix / DarNES; this time people carefully didn’t say that it couldn’t be done. I did, however, challenge the establishment: we could make things so much more efficient that we could run at least a hundred Netflix subscribers off my phone.

I’m a fan of the now slightly older Samsung Galaxy S3. Thanks to the CyanogenMod project, the phone is very well understood, and though building a working Android Operating System from source isn’t a trivial task, the guides and forums were helpful.

Unfortunately, my buildbox was overheating, due mostly to the dustiness in my building (and the intensity of the mka build workload), so first I had to clean the machine out, apply some Arctic Silver 5 Thermal Gel, and get a working kernel on the device so that I could customize it a bit.

Success, I had a working d2lte build. I picked up a few more SGH-I747 phones on eBay, talked to Alex and Carenina, and we were off.

Slicing up the Project

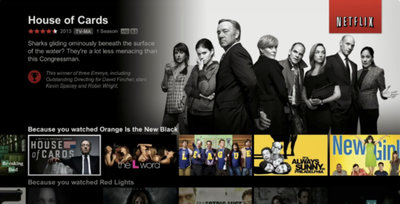

Among other things, Netflix is famous for moving over to the cloud. Because engineers can quickly provision and spin up servers, engineering never gets bogged down waiting for resource allocations, and teams can move at their own pace on software development. Packing the entire Netflix / Amazon cloud into a few phones wasn’t going to fit into the scope of a hackday entry, so we decided to focus on a busy part of the client / cloud interactions: the Gallery, where you go when you’re browsing around titles.

The Gallery changes shape all the time as we relentlessly test changes, but we thought it was worth pushing ourselves to see if we could serve it from commodity phone hardware. The prediction pipelines and a large array of the usual microservices were scoped down, and we focused on just the elements that were required to support the browse experience.

A minimal set of “picoservices” (running on phones or otherwise) would need to at least:

- Separately serve the personalized recommendations for a subset of users.

- Show some programmatic workload being applied to the recommendations.

- Make sure all the needed metadata was there to build the information in the top left corner.

- Contain the static assets needed for the experience - boxart, large artwork, any spritesheets, and the customized UI.

I began repacking the JSON-formatted personalization data, initially as a FlatBuffer and later as a hand-packed Plain Old C Object (POCO, actually a carefully aligned struct), while Alex set about eking out as much RPS as he could from the limited hardware. We still needed someone who understood how the data flowed through the Falcor JsonGraph model to work with the packed binary data in our JavaScript UI, so we tapped Michael Paulson for the task.
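For readers who haven’t hand-packed a binary format, here is a minimal sketch of what a fixed-layout POCO record can look like. The `poco_row` type, its field names and widths, and the `poco_read_row` helper are all hypothetical, not the actual HoneyBadger format. The idea is that every field sits at a known, aligned offset, so a client can pull rows straight out of the blob without a parser, and `static_assert` catches any accidental layout change at compile time.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical fixed-layout row record (not the real HoneyBadger format).
 * Offsets are fixed and checked at compile time below. Assumes the writer
 * and readers share byte order (little-endian on typical x86 and ARM). */
typedef struct {
    uint32_t video_id;     /* offset 0 */
    uint16_t row_index;    /* offset 4 */
    uint8_t  year_offset;  /* offset 6: years since 1895 */
    uint8_t  flags;        /* offset 7 */
    uint8_t  uuid[16];     /* offset 8: a fixed run of 16 bytes */
} poco_row;

static_assert(sizeof(poco_row) == 24, "poco_row must be exactly 24 bytes");
static_assert(offsetof(poco_row, uuid) == 8, "uuid must start at byte 8");

/* Copy row i out of a packed blob of consecutive rows.
 * memcpy avoids unaligned pointer access; returns -1 if row i is out of bounds. */
static int poco_read_row(const uint8_t *blob, size_t blob_len, size_t i, poco_row *out) {
    if (i >= blob_len / sizeof(poco_row)) return -1;
    memcpy(out, blob + i * sizeof(poco_row), sizeof(poco_row));
    return 0;
}
```

A fixed `uint8_t uuid[16]` like this is exactly the kind of fixed run of bytes that a plain struct expresses trivially.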

Implementation - Pre-Work

CyanogenMod is an alternative hackable operating system that you can flash onto a large number of Android smartphones, including the variant of the S3 that I had. I learned the basics of Android.mk (Android makefiles), a little about what was and wasn’t supported in their custom version of libc, and ported thttpd, h2o, lwan, and a few other webservers. We needed a fast data store with similar characteristics to EVCache to back the servers, so I ported redis and memcached as well, although memcached came in a bit late due to some competing project issues.

While Michael was busy finding all of the precomputed data we needed in our existing server stack, I pulled 5MB of English text out of Wikipedia and began pretending that it was movie metadata to unblock Alex. Alex loaded the data into redis, wrote a hiredis C endpoint for thttpd on a VirtualBox host, and began load testing the webservers to see what sort of real throughput we could get. Our proposition was simple: if we could make the payload small enough, it should be drastically more efficient to serve, in the very first request, a single binary blob containing all the prebuilt data the client-side Falcor cache would need.
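If the blob doesn’t change from request to request, the whole HTTP response can be assembled once at startup and then served with a single write. The sketch below assumes a static in-memory blob, a hypothetical `build_response` helper, and an `application/octet-stream` content type; it is not Alex’s actual endpoint, which read its data from redis via hiredis.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical prebuilt response: headers and binary payload in one buffer. */
typedef struct {
    char  *data;
    size_t len;
} prebuilt_response;

/* Assemble the full response once; serving a request is then a single
 * write of out->data. Returns 0 on success, -1 on failure. */
static int build_response(const unsigned char *blob, size_t blob_len, prebuilt_response *out) {
    char header[256];
    int hlen = snprintf(header, sizeof header,
                        "HTTP/1.1 200 OK\r\n"
                        "Content-Type: application/octet-stream\r\n"
                        "Content-Length: %zu\r\n"  /* note the colon after the name */
                        "\r\n",
                        blob_len);
    if (hlen < 0 || (size_t)hlen >= sizeof header) return -1;

    out->len = (size_t)hlen + blob_len;
    out->data = malloc(out->len);
    if (out->data == NULL) return -1;
    memcpy(out->data, header, (size_t)hlen);
    memcpy(out->data + hlen, blob, blob_len);
    return 0;
}
```

The trade-off is memory for CPU: the response is held in RAM once, but no per-request formatting or data-store round trip is needed.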

Michael finished up the Groovy script to collect the precomputed data and handed it back to me. Since I wasn’t sure at that point whether we’d need live access to recomputed personalization data, I wrote a quick and dirty program in C that parsed the resulting JSON (using the excellent kson functions from klib) and repacked it as a POCO blob. My earlier experiments with FlatBuffers showed that without the ability to specify a fixed run of bytes (such as ubyte[16] for a UUID), we might run into trouble on the low-powered TV devices, so I re-read ESR’s The Lost Art of C Structure Packing, popped open a hex editor (dhex), and went to work. We also had help from Oliver, a talented feline programmer in his own right.
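The core lesson of ESR’s guide is that the compiler pads each field out to its natural alignment, so field order alone can change the size of a struct. Here is a minimal illustration with four hypothetical row fields (not our actual format), assuming a typical ABI such as x86-64 or ARM where `uint32_t` is 4-byte aligned and `uint16_t` is 2-byte aligned.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical fields declared in "natural" reading order:
 *   flags        @0   (1 byte) + 3 bytes padding
 *   video_id     @4   (4 bytes)
 *   year_offset  @8   (1 byte) + 1 byte padding
 *   row_index    @10  (2 bytes)
 * sizeof == 12, of which 4 bytes are padding. */
struct row_naive {
    uint8_t  flags;
    uint32_t video_id;
    uint8_t  year_offset;
    uint16_t row_index;
};

/* The same fields sorted by decreasing alignment:
 *   video_id @0, row_index @4, year_offset @6, flags @7
 * sizeof == 8, with no padding at all. */
struct row_reordered {
    uint32_t video_id;
    uint16_t row_index;
    uint8_t  year_offset;
    uint8_t  flags;
};
```

In the naive layout a third of every row is padding, which adds up quickly when every request ships the whole blob.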

The Day of the Hack

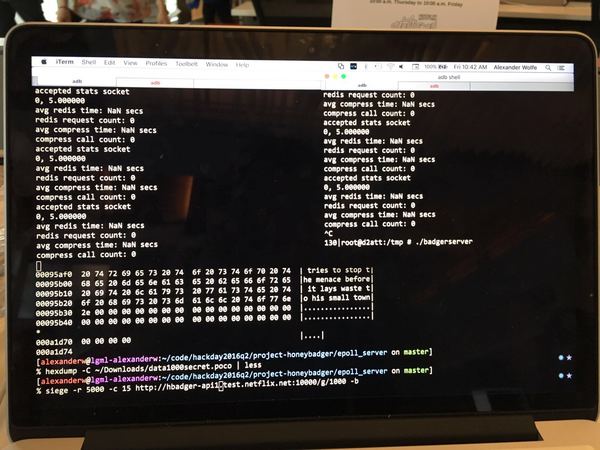

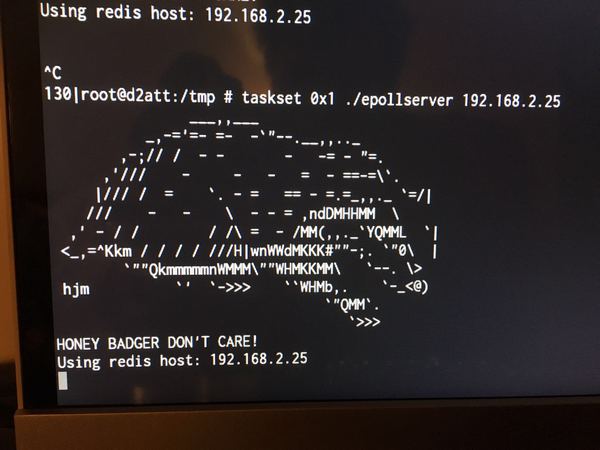

Our payload was 150KB after compressing with bzip2, but thttpd just wouldn’t go above 4 or 5 RPS (the scaled equivalent of about 280 - 350 RPS). Alex eventually wrote a standalone server, dubbed badgerserver, to make sure we were getting everything we could out of epoll, which brought us up to about 7 or 8 RPS (scaled: 490 - 560 RPS). Michael was confident that I was off by a few bytes in the payload format, and when he pulled apart my POCOs, it turned out that one of my overly aggressive optimizations (using only one byte to store all movie dates, since they all had to be newer than 1895) was leading to an off-by-4 problem in the UI. Christiane and Justin had put together a rack of TVs with a couple of Rokus, a UHD SmartTV, a PlayStation 4, and a Faraday cage so we could just wheel the thing on and off the stage easily, but it was drawing a crowd of onlookers around our rig.
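The one-byte date trick works by storing an offset from a base year rather than the year itself. Here is a minimal sketch, assuming the stored value is the year only and a base of 1895; the `year_encode` / `year_decode` names are hypothetical, and this is not a reconstruction of what caused our off-by-4.

```c
#include <stdint.h>

/* Hypothetical one-byte year encoding: store (year - 1895) in a uint8_t,
 * which covers 1895..2150 (1895 + 255). */
#define YEAR_BASE 1895

/* Returns 0 on success, -1 if the year does not fit in one byte
 * (rejected rather than silently wrapped). */
static int year_encode(int year, uint8_t *out) {
    if (year < YEAR_BASE || year > YEAR_BASE + UINT8_MAX) return -1;
    *out = (uint8_t)(year - YEAR_BASE);
    return 0;
}

/* The decoder (in our case, the JavaScript UI) must use the same base;
 * any mismatch shifts every date by a constant amount. */
static int year_decode(uint8_t encoded) {
    return YEAR_BASE + encoded;
}
```

Bugs of this shape show up as a constant offset in the UI rather than garbage, which makes them easy to overlook in a hex dump.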

The usual insanity of hackday went through its general cycles: in the beginning it was all fun and games; by the time the pizza and beer the organizers provided for dinner arrived, a number of people were gone; by 10 or 11 the numbers were whittling down; but it wasn’t until 3AM that we decided we were in trouble. We still didn’t have the video submission of our hack ready for the committee to review, and badgerserver wasn’t playing nice with the older TV devices (though for some reason it was working fine with the newer ones). Carenina was constantly in and out, and she pressed hard on our message, pushing me to tell and retell the story next to a whiteboard several times, but I could tell from her disappointment that I was overcomplicating the discussion. She promised slides, but morale was definitely at a general low.

Ever since I lived in Boston, I’ve said that nothing good ever happens after πAM, and the team generally decided that it would be better to have a few hours of sleep before picking back up ahead of the presentations in the morning.

“GUY!”

“Huh? Oh, sorry Alex. What was I doing?”

“Michael needs the fixes on POCO data.”

“Yeah dude,” Michael added, “I’m pretty sure you’re off by 2 bytes after the row header.”

“Right, on it.”

It was 9AM, and my attention was definitely wandering.

“I’ve got it,” said Alex, unusually excited.

“What?” I mumbled inquisitively.

“It’s a missing colon! The Content-Length header doesn’t have a colon after it! The older TVs seem to be very upset about it.”

The perils of implementing a webserver on the day of a hackday. Teams were dropping out like flies at this point, as they decided enough was enough. There were just 3 hours left before the presentations started, but it looked like we might have turned a corner.
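Lenient HTTP clients will often guess their way past a malformed header, while stricter ones treat a header line without a colon as a protocol error. We don’t know exactly what the older TVs’ HTTP stacks do internally, so the following is only a minimal sketch of a strict check (the `header_line_ok` name is hypothetical), following the `field-name ":" field-value` grammar of RFC 7230, which also forbids whitespace between the field name and the colon.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical strict header-line check: the line must look like
 * "name: value", with a non-empty name and no whitespace before the colon.
 * Returns 0 if the line is well formed, -1 otherwise. */
static int header_line_ok(const char *line, size_t len) {
    const char *colon = memchr(line, ':', len);
    if (colon == NULL || colon == line) return -1;  /* missing colon or empty name */
    for (const char *p = line; p < colon; p++)
        if (*p == ' ' || *p == '\t') return -1;     /* whitespace in the field name */
    return 0;
}
```

Under a check like this, `Content-Length 42` is rejected outright, which is consistent with the newer devices tolerating the bug while the older ones refused the response.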

“And that last fix worked Guy, whatever you did,” Michael chimed in. “Great. I’ll go back to the Easter Egg.”

I had connected both sides of the ssh tunnels so that the gdbserver on my phone was talking to the buildbox back in my apartment, but gdb was unhappy about some of the stack information it was getting. I had never debugged a frame between a debugger and a device 15 miles apart, but there it was. Oh wait, that was last night. Is it really already 10AM?

“Guy, the slides are ready. The lines. I love the lines.” “We have lines Carenina?” “Did you guys sleep at all last night?” “Guy’s gone poetic,” Alex replied, “which usually means sleep must come soon.” “I’m fine.. just.. coffee..” She smiled. “I’ll finish these up, I just have to run to a meeting first.” “K.”

Alex had wired up part of the load test, pointing siege at some of the badgerservers running on the devices.

He had dialed back the server UI to poll for the activity load only every 5 seconds, to avoid having the server UI cut too much into the available CPU. After some heavy analysis, we realized that we were IO bound anyway, so it didn’t really matter, but we figured we might as well be on the safe side.

Things were certainly looking better than the night before, and whatever else happened, at least we’d picked up a few of the cool-looking T-shirts. I rebuilt the CDN files, while Michael put the finishing touches on part of the UI and walked a few onlookers through what they were seeing on the rack. Since the wifi had kept acting up with the large number of devices in use near the theater, we’d moved the CDN to a Raspberry Pi 2B by then, one I’d actually bought for DarNES.

But then it was 12:05PM, and the presentations had already begun. We scrapped the rest of the Easter Egg, and scrambled to delicately walk the rack through the building to the backstage area.

Game Time

A lot more people were trying hardware hacks than in previous hackday events. It was ambitious, but hardware tends to fail a lot more often than software when you’re under tough time constraints to get something reliably working, so the schedule had cleared up a lot. We had been slotted 13th, on Friday the 13th, which wasn’t doing my superstitions any favors, but I brushed it off: we had never won a hackday, so bad luck might as well have at it. I promised them glory, not a win.

The power teams were out in full force. Micah’s side decided their actual globe-from-a-projector-in-a-light-bulb-of-live-global-data was too delicate, while the Bogdan-Evan collective had a solid interactive-movie-object-throwing-detection-game working pretty well. Joey’s team kicked things off with a great VR-Netflix-on-Vive Virtual Video Store hack, and we kept worrying at the stage wifi setup. It was a strong showing, with about 200 contenders and 80 hacks.

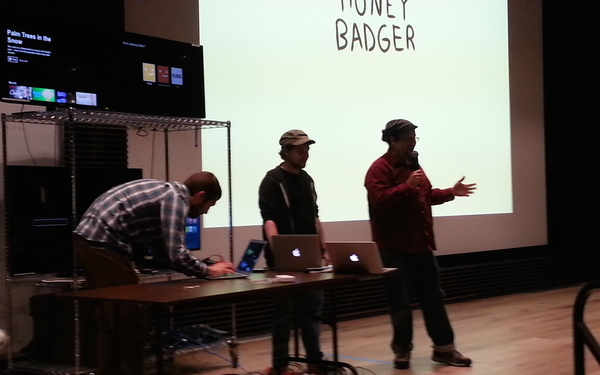

I hadn’t seen the slides yet, and we were still setting up when the previous team finished. Alex prompted me to tell the audience what we had done, and I began speed-talking my way through the setup.

The shocking news came later when we won. We’d put Netflix on the original Nintendo, we’d shown streaming over a Kazakh cell phone provider on 3G from a field in the middle of nowhere, but the hack that won was to reduce the server stack down to a couple of cell phones. Around 3PM at the award ceremony, as I was walking back from picking up my friend Lyle’s award, I mumbled in a stage whisper towards the marble award, “Closest I’m ever gonna get to one of these!” I didn’t even believe my teammates when they told me Neil had just announced our names for the Infrastructure Award. Wrock on!

A Word of Thanks

To my dream team picks:

- Alex Wolfe
- Carenina Garcia Motion
- Michael Paulson

I’m sure if we wanted to go to the moon, we’d figure out a way.

A special thanks goes to the AOSP and the CyanogenMod projects, and to the large number of software projects that we used / ported / tuned:

| | | |
|---|---|---|
| thttpd | redis | memcached |
| siege | iperf | h2o |
| nginx | ab | dhex / xxd |
| android studio | curl | flatbuffers |
| epoll / perf | lwan | zlib |
| bzip2 (csail) | raspbian | libevent |
| libuv | klib | libh2o |
| libhiredis | linenoise | brotli |
| cloexec | golombset | libyrmcds |
| neverbleed | picohttpparser | yaml |
| yoml | gentoo | bitbucket |

Thanks everybody, and until next time, enjoy and keep on open sourcing!

– Guy

A few extras:

An adb shell showing you the actual running server:

A piece of a POCO file hexdump: