Autotoday - AI to auto update the !today command

How I learned to forget about setting the !today command text for live coding streams

TL;DR:

- Use streamlink to capture the live Twitch stream (HLS / MPEG-TS)

- Demux the audio and transcode it to the format the ASR model was trained on (float32, 16 kHz, mono)

- Run it through speech to text, a.k.a. automatic speech recognition (ASR) (wav2vec2 / whisper)

- Use a fine-tuned BART model to summarize what’s said in a single sentence

- Set the !today command

Background

For the last few months, I’ve been doing a daily one-hour live coding stream on Twitch. That was a pretty hefty jump from my previous approach of longer (typically 3-5 hour) streams held less frequently. I’d fallen off from streaming 2 to 3 times to a lot less often because, as one of the only live coders streaming directly from Linux/Ubuntu, the stacked time cost of the tasks needed to spin up a stream was taking longer than most of my streams would last. Given that, my focus has been on removing the friction so I can just get in and get going with my setup, and one consistent miss when starting up quickly is remembering to set the !today command.

If you’re not familiar with Twitch, the streamer is usually running a live video/audio stream to Twitch, which gets relayed to any number of viewers (with Twitch’s low-latency setting, there are about 5-10 seconds of lag between when you say something and when viewers see it). Viewers have access to a text-based chat (an IRC derivative), which the streamer will often reply to verbally. There are any number of streamers live at once, and viewers jump in and out of streams as they fancy. Unfortunately, that means a lot of people bounce in and out of your stream without any prior context on what you’re doing (other than an often out-of-date stream title). The generally accepted solution is to add a chat bot that helps with stream management by giving viewers an async way to get details on the stream, and one of the most common commands for that is !today, which usually holds a current description of what the stream is about.

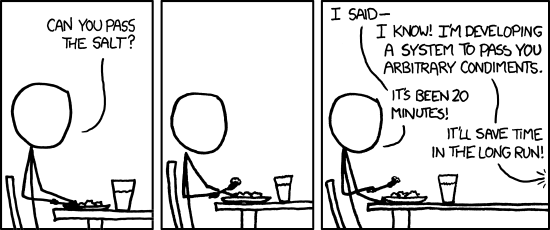

So why miss the opportunity for a totally overengineered piece of tech to automatically set the !today command?

After viewers had run the !today command 3 or 4 times when I forgot to set it, I figured it was time to employ some AI to do the dirty work and generalize the solution. The basic idea was to take the stream audio, run it through speech to text, then to do a short summary and set the !today text with that.
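As a rough shape of that idea, here’s a minimal Python sketch of the pipeline with each stage passed in as a plain function. The names (`autotoday`, `transcribe`, `summarize`, `set_today`) and the sliding window over the most recent transcript pieces are hypothetical choices for illustration, not the project’s actual code.

```python
from typing import Callable, Iterable, Iterator


def autotoday(audio_chunks: Iterable[object],
              transcribe: Callable[[object], str],
              summarize: Callable[[str], str],
              set_today: Callable[[str], None],
              window: int = 4) -> Iterator[str]:
    """Hypothetical pipeline: stream audio -> speech to text -> summary -> !today text."""
    transcript: list[str] = []
    for chunk in audio_chunks:
        text = transcribe(chunk).strip()
        if not text:
            continue  # silence: keep the previous !today text
        transcript.append(text)
        # Only summarize the most recent speech so the summary tracks the current topic.
        summary = summarize(" ".join(transcript[-window:]))
        set_today(summary)
        yield summary
```

Keeping the stages as injected functions makes it easy to swap wav2vec2 for whisper, or one summarizer for another, without touching the loop.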

Getting the stream

One option would be to share the mic audio between OBS and the autotoday system, but given the issues I’ve had in the past with OBS and ALSA + PulseAudio/PipeWire, and the brittle history of OBS plugins, I decided to build a more general solution and just have autotoday listen in on the published stream. This also makes it easy to split the autotoday hardware from the streaming setup, or to run it for multiple streamers if there’s some community interest.

Since most of the AI systems use Python as the composition framework, I decided to try out a few Python-based streaming clients. Eventually I landed on streamlink, which was able to pull the live Twitch stream (HLS) and output it as MPEG-TS.
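A minimal sketch of reading the stream with streamlink’s Python API, assuming the `streamlink.streams()` convenience function and Twitch’s `audio_only` quality (falling back to `best`). The channel URL and helper name are placeholders, and the import is deferred into the generator so the function can be defined without streamlink installed.

```python
from typing import Iterator


def twitch_ts_chunks(channel_url: str, quality: str = "audio_only",
                     chunk_size: int = 64 * 1024) -> Iterator[bytes]:
    """Yield raw MPEG-TS bytes from a live Twitch channel (hypothetical helper)."""
    import streamlink  # deferred: only needed once the generator actually runs

    streams = streamlink.streams(channel_url)  # dict of quality name -> Stream
    if not streams:
        raise RuntimeError(f"no live streams found at {channel_url}")
    stream = streams[quality] if quality in streams else streams["best"]
    fd = stream.open()  # file-like object producing MPEG-TS bytes
    try:
        while True:
            data = fd.read(chunk_size)
            if not data:
                break  # stream ended
            yield data
    finally:
        fd.close()
```

Usage would be something like `for chunk in twitch_ts_chunks("https://www.twitch.tv/<channel>"): ...`, feeding each chunk to the transcoder.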

All of this was built via live coding on stream, so there was plenty of commentary in the chat on every technical decision. I spent a little time trying to work with streamlink’s ffmpeg options, hoping all the transcoding could be done in a single ffmpeg invocation that output what I needed for the ASR and later steps in the pipeline. What I didn’t realize at the time was that streamlink doesn’t run ffmpeg by default (at least in the version I was using), so I decided to spin up a separate ffmpeg process to handle the work.

Transcoding

Not seeing a native C-based binding, I tried out PyAV and python-ffmpeg, but neither worked for me. PyAV claims direct ffmpeg/libav support, but I couldn’t find the right options to get a live streaming pipeline working without writing the file out to disk first, which may be a libav issue. python-ffmpeg mostly ran into the same issues, but it’s more of a loose subprocess wrapper, and its preferred options mostly interfered with what I wanted ffmpeg to do.

After checking the ffmpeg pipe protocol, I realized that despite feeding in an MPEG-TS stream, the process was still blocking. I tried a few more framing formats and transcoding options, but didn’t see an obvious way to get it to unblock (short of, once again, writing out the file and reading it back in). While debugging, I wrote the audio out to a file and then transcoded that, which worked fine. Since I didn’t want to spend a lot of stream time exploring esoteric ffmpeg options, I opted to use a Unix fifo (named pipe) instead.
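For reference, a standalone ffmpeg command that takes MPEG-TS in and emits raw audio in the format the ASR model wants might look like the sketch below, assuming ffmpeg is on the PATH. The helper names are hypothetical; the flags (`-f mpegts`, `-vn`, `-ac 1`, `-ar 16000`, `-f f32le`, `pipe:0`/`pipe:1`) are standard ffmpeg options. The source can be stdin (`pipe:0`) or a filesystem path such as a fifo.

```python
import subprocess
from typing import List


def ffmpeg_pcm_args(src: str = "pipe:0", sample_rate: int = 16_000) -> List[str]:
    """Build an ffmpeg argv that turns MPEG-TS input into raw float32 PCM on stdout."""
    return [
        "ffmpeg", "-hide_banner", "-loglevel", "error",
        "-f", "mpegts", "-i", src,  # MPEG-TS from stdin ("pipe:0") or a path such as a fifo
        "-vn",                      # drop the video stream
        "-ac", "1",                 # downmix to one channel (mono)
        "-ar", str(sample_rate),    # resample to 16 kHz
        "-f", "f32le",              # raw little-endian float32 samples, no container
        "pipe:1",                   # write to stdout
    ]


def start_transcoder(src: str) -> subprocess.Popen:
    # The caller reads PCM bytes from proc.stdout.
    return subprocess.Popen(ffmpeg_pcm_args(src), stdout=subprocess.PIPE)
```

Building the argument list separately from launching the process keeps the flags easy to inspect and tweak on stream.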

A fifo is a long-standing Unix mechanism for process-to-process communication in a semi-decoupled fashion (and probably deserves a separate blog post to do it justice). Rather than directly piping one command’s output into another command’s input, a fifo lets one process write to a path and another process read from that same path. The kernel doesn’t implement it as a regular file, though: in keeping with the “everything is a file” Unix philosophy, the fifo node appears on the filesystem at a path, but the data passing through it never needs to hit disk. Sockets (specifically Unix domain sockets) provide a different interface and better decoupling at the cost of more complexity, but at the time I thought they’d make for a messier live stream, so I went with the fifo (opened as non-blocking), which worked acceptably.
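A minimal Python demonstration of that fifo behavior, assuming a Unix-like OS (`os.mkfifo` isn’t available on Windows). It opens the read end with `O_NONBLOCK` so the open doesn’t wait for a writer, writes a small payload from the write end, and drains it. The path and helper name are hypothetical, and the payload is assumed to fit in the kernel pipe buffer.

```python
import os
import stat
import tempfile


def fifo_roundtrip(payload: bytes) -> tuple[bool, bytes]:
    """Send payload through a named pipe; return (node_is_fifo, bytes_read)."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "autotoday.fifo")  # hypothetical fifo location
        os.mkfifo(path)
        # The node shows up on the filesystem, but data only passes through a kernel buffer.
        is_fifo = stat.S_ISFIFO(os.stat(path).st_mode)
        # Non-blocking open of the read end returns immediately, even with no writer yet.
        rfd = os.open(path, os.O_RDONLY | os.O_NONBLOCK)
        try:
            # A reader now exists, so opening the write end doesn't block.
            wfd = os.open(path, os.O_WRONLY)
            try:
                os.write(wfd, payload)  # small payload: fits in the pipe buffer
            finally:
                os.close(wfd)
            chunks = []
            while True:
                try:
                    chunk = os.read(rfd, 4096)
                except BlockingIOError:  # no data right now, but a writer is still open
                    break
                if not chunk:  # EOF: all writers closed and the buffer is drained
                    break
                chunks.append(chunk)
            return is_fifo, b"".join(chunks)
        finally:
            os.close(rfd)
```

In the real pipeline the writer (streamlink) and reader (ffmpeg) are separate processes that only share the fifo path.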

Reading the code from the model example, I could see that the model expected the audio to be presented as a 30-second numpy array chunk of float32 samples, with 16,000 samples per second of audio, in a single channel (mono), and in little-endian byte order (least significant byte first). If the model pipeline code sees raw bytes, it starts up a separate ffmpeg process (which would also have blocked for the same reason as above), converts the input to a float32, 16 kHz, mono, little-endian byte stream, and then uses numpy.frombuffer to turn it into a float32 array. I applied those format settings to my own transcode so everything happens in one pass before loading the data into numpy. While this pipeline makes a lot of unnecessary memory copies, I figured we should get a working version first before optimizing or trying to keep all the data on the GPU, where inspection tools are a bit more limited.
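A minimal sketch of the numpy side, assuming ffmpeg’s stdout delivers f32le bytes in arbitrary-sized reads. It regroups those reads into fixed 30-second float32 windows (30 s × 16,000 samples/s × 4 bytes = 1,920,000 bytes each). The helper name is hypothetical, and a trailing partial window is simply dropped here.

```python
from typing import Iterable, Iterator

import numpy as np

SAMPLE_RATE = 16_000  # samples per second expected by the model
BYTES_PER_SAMPLE = 4  # float32


def pcm_windows(byte_stream: Iterable[bytes], seconds: int = 30,
                sample_rate: int = SAMPLE_RATE) -> Iterator[np.ndarray]:
    """Regroup arbitrary-sized f32le byte reads into fixed-length float32 windows."""
    window_bytes = seconds * sample_rate * BYTES_PER_SAMPLE  # 30 s -> 1,920,000 bytes
    buf = bytearray()
    for data in byte_stream:
        buf.extend(data)
        while len(buf) >= window_bytes:
            # "<f4" = little-endian float32; copy the window out of the growing buffer
            yield np.frombuffer(bytes(buf[:window_bytes]), dtype="<f4")
            del buf[:window_bytes]
    # Any trailing partial window is dropped in this sketch.
```

Each yielded array is already in the shape the ASR pipeline accepts, so no second ffmpeg pass is needed.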

Automatic Speech Recognition (ASR)

Before moving to the final transcoding configuration above, I started with a self-contained basic example that isolated just the ASR piece. Initially I built with the wav2vec and wav2vec2 models from Facebook, which were close to the state of the art when they were released. These models only identify sounds (loosely phonemes, the sounds of syllables, not whole words), outputting reasonable letters for the given sounds, with n-gram based filtering applied to stick to the letter sequences that occur in the languages they match against, and without case or punctuation (think: “THIS IS WHAT WAS SAID”, not “This is what was said”). The model also exposes useful word embedding information, which I’ll likely discuss in a later blog post. It’s then the job of the implementation to take these letter sequences and apply language model post-processing to get the kind of transcription we’d expect from a fully developed ASR system. I was impressed with the result, but adding language modeling and dressing up full sentence structure and punctuation was still going to be a lot of work.

At the suggestion of one of the Twitch audience, I also looked at OpenAI’s whisper model, which is several ASR generations newer than wav2vec2. Where wav2vec2 relies on Connectionist Temporal Classification (CTC), a method of collapsing repeated per-frame predictions so the model can handle arbitrary speech speeds, whisper uses a seq2seq encoder-decoder that performs the language modeling and punctuation tasks as part of transcription. Since TANSTAAFL (there ain’t no such thing as a free lunch), this model is a lot bigger and more compute intensive than the wav2vec2 one, but the output came out in more or less finished transcription format (think: “This is what I said.”), which seemed like a solid trade for live coding.
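To make the CTC collapsing step concrete, here’s a minimal greedy CTC decoding sketch in plain Python. It assumes the model has already produced its most likely token for each audio frame; the blank token `<pad>` and word delimiter `|` are assumptions modeled on common wav2vec2 vocabularies, not something taken from this project.

```python
from itertools import groupby

BLANK = "<pad>"   # assumed CTC blank token ("no new symbol in this frame")
WORD_DELIM = "|"  # assumed word separator token


def ctc_greedy_decode(frame_tokens: list[str]) -> str:
    """Collapse per-frame best guesses into text, CTC style."""
    collapsed = [tok for tok, _ in groupby(frame_tokens)]  # merge consecutive repeats
    letters = [tok for tok in collapsed if tok != BLANK]   # then drop blanks
    return "".join(letters).replace(WORD_DELIM, " ").strip()
```

The blank is what lets a genuine double letter survive: `L L <pad> L` decodes to “LL”, while `L L L` decodes to a single “L”, however slowly it was spoken.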

AI Autosummarization

Now that we had a transcription that looked similar to what a human would have written, it was time to explore summarization techniques. Broadly, researchers have studied two major types of autosummarization: extractive and abstractive. Extractive summaries attempt to find the most important words, terms, or even whole sentences in a given text and present them as the summary, or as features for further refinement into one. Abstractive techniques attempt to build a shorter summary text directly from the source text, without necessarily reusing any of its exact language (more of an abstract summary, written in its own words). Having been interested in Bidirectional Encoder Representations from Transformers (BERT) models in earlier projects, I was pleased to discover that a group of researchers had combined a BERT-style bidirectional encoder with a GPT-style autoregressive decoder into a seq2seq model called BART. They describe it as “a denoising autoencoder”: it’s trained to reconstruct text from noisy input, and it can be fine-tuned both for generating text from a source (such as one in another language) and for general comprehension tasks based on finding the important concepts being discussed in the text.
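For contrast with the abstractive approach, here’s a toy extractive summarizer in plain Python: it scores each sentence by the average corpus frequency of its non-stopwords and keeps the top `n`. This is a deliberately simple frequency heuristic with a tiny hypothetical stopword list, not the method used in autotoday.

```python
import re
from collections import Counter

# Tiny hypothetical stopword list; a real system would use a fuller one.
STOPWORDS = {"a", "an", "and", "are", "i", "in", "is", "it", "of", "so", "the", "this", "to", "we"}


def content_words(sentence: str) -> list[str]:
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]


def extractive_summary(text: str, n: int = 1) -> str:
    """Pick the n sentences whose words are most frequent across the whole text."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence: str) -> float:
        words = content_words(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    top = set(ranked[:n])
    # Keep the chosen sentences in their original order.
    return " ".join(s for i, s in enumerate(sentences) if i in top)
```

Whatever it returns is copied verbatim from the transcript, which is exactly the limitation abstractive models like BART avoid.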

BART itself needs to be fine-tuned for a particular use; less formally, you need to teach it what to do with the understanding it builds up of the input text. For one set of tasks, BART was fine-tuned on the CNN/DM corpus, a large set of CNN and Daily Mail news articles paired with shorter summaries. Using that, we can treat the source news articles as the original language and the shorter summaries as another language (instead of English to Cantonese, let’s do English to Short Form Summary English). Procuring a previously fine-tuned version of BART, I pointed it at some text transcripts I’d made of previously completed streams, and we were in the AI autosummary business!
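A minimal sketch of running a fine-tuned BART summarizer via the Hugging Face `transformers` summarization pipeline. `facebook/bart-large-cnn` (fine-tuned on CNN/DM) and `facebook/bart-large-xsum` (fine-tuned on XSum) are public checkpoints, but they aren’t necessarily the exact ones used here, and the length limits are illustrative. The summarizer is passed in so the wrapper can be tried without downloading weights; note that BART’s input is capped at 1,024 tokens, so long transcripts need to be chunked or truncated first.

```python
from typing import Callable


def load_summarizer(model: str = "facebook/bart-large-cnn") -> Callable:
    """Load a BART summarization pipeline (downloads weights on first use)."""
    from transformers import pipeline  # deferred: heavy import, only needed for real runs
    return pipeline("summarization", model=model)


def summarize(text: str, summarizer: Callable,
              max_length: int = 60, min_length: int = 10) -> str:
    # Generation limits are counted in tokens, not characters.
    result = summarizer(text, max_length=max_length, min_length=min_length, do_sample=False)
    return result[0]["summary_text"].strip()
```

Usage would be `summarize(transcript, load_summarizer())`, or `load_summarizer("facebook/bart-large-xsum")` for the one-sentence XSum style.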

Unfortunately, the summaries came out as very long paragraphs. Not quite what we needed for a short, one-sentence !today command, but this was much closer. Finding these things and coding them live in front of an audience in an hour does add its own challenges to researching the available options.
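One possible stopgap until a better fine-tune is in place (a hypothetical helper, not part of the project) is to keep only the first sentence of the long summary and cap its length. The 500-character cap is an assumption about Twitch’s chat message limit, and the naive sentence split will trip on abbreviations like “e.g.”.

```python
import re

TWITCH_MAX_CHARS = 500  # assumption: Twitch's chat message length limit


def to_today_text(summary: str, limit: int = TWITCH_MAX_CHARS) -> str:
    """Keep only the first sentence of a summary and cap it at `limit` characters."""
    flat = " ".join(summary.split())               # collapse newlines and runs of spaces
    match = re.match(r"(.+?[.!?])(?:\s|$)", flat)  # shortest prefix that ends a sentence
    sentence = match.group(1) if match else flat
    if len(sentence) > limit:
        sentence = sentence[: limit - 3].rstrip() + "..."
    return sentence
```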

Taking a moment after stream to look around a bit, I found the Extreme Summarization (XSum) dataset, a corpus built from BBC news articles, each paired with a very short one-sentence summary. The paper looked like exactly what I needed (although now I may be introducing British orthography to the new program! or programme?). While I haven’t had a chance to apply the XSum fine-tuned version yet, I’m looking forward to doing some better fine-tuning in 2024!

Setting the Command text

Somebot, the bot I wrote on much earlier live coding streams, offers a number of integration points for live data. While I have yet to finish the end-to-end version of the full !today autosummary command, I expect we’ll start with a simple file-based hack (it’s sufficient to start by writing the text to a well-known temporary file location and reading it at invocation time, with all the related gotchas), and move on to a more sophisticated, loosely coupled update mechanism in future live streams.
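A minimal sketch of that file-based hack, written to sidestep one of the classic gotchas (a reader catching a half-written file): write to a temporary file in the same directory, then atomically rename it over the target with `os.replace`. The path and function names are hypothetical, not Somebot’s actual interface.

```python
import contextlib
import os
import tempfile
from pathlib import Path

# Hypothetical well-known location shared by autotoday (writer) and the bot (reader).
TODAY_PATH = Path(tempfile.gettempdir()) / "autotoday" / "today.txt"


def write_today(text: str, path: Path = TODAY_PATH) -> None:
    """Atomically replace the !today text so readers never see a partial write."""
    path.parent.mkdir(parents=True, exist_ok=True)
    # Write to a temp file in the same directory, then rename it over the target.
    fd, tmp = tempfile.mkstemp(dir=path.parent, prefix=".today-")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            f.write(text)
        os.replace(tmp, path)  # atomic on POSIX when both paths are on one filesystem
    except BaseException:
        with contextlib.suppress(FileNotFoundError):
            os.unlink(tmp)
        raise


def read_today(path: Path = TODAY_PATH, default: str = "No !today set yet.") -> str:
    """Read the current !today text at command invocation time."""
    try:
        return path.read_text(encoding="utf-8").strip() or default
    except FileNotFoundError:
        return default
```

The reader never holds a lock; it just sees either the old text or the new one, which is about as loosely coupled as a file can get.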

Shortly into 2024, I think we’ll need to update this blog with how these last few tasks go, but for the moment, I’m looking forward to the future streams as we wrap this project up, and I’d like to thank all of my viewers and supporters for letting me take this journey through a heavily overengineered way of setting a simple summary. As lastmiles said in my last live coding stream of 2023, “36,000 lines of code to set the TODAY”, which works as an extreme summary for me.